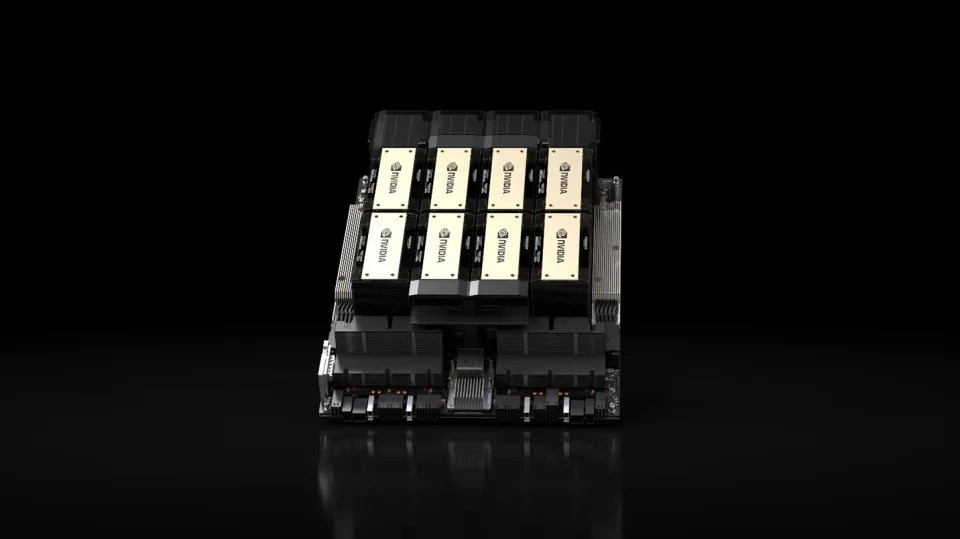

NVIDIA's next generation of AI supercomputer chips is here

NVIDIA has unveiled the next generation of its AI supercomputer chips, which will likely play a large role in deep learning and large language models (LLMs), weather and climate prediction, drug discovery, quantum computing and more.

The new chips are more capable, of course, but they are also geared more toward AI and LLMs. The technology represents a significant leap over the previous generation and is poised for use in data centers and supercomputers.

The key product is the HGX H200, built around the H200 GPU based on NVIDIA's "Hopper" architecture and intended to replace the popular H100. The H200 is the company's first chip to use HBM3e memory, which is faster and has more capacity, making it better suited to large language models. "With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the NVIDIA A100," the company wrote. Note that this comparison is against the older A100, not the H100 that the H200 replaces.
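As a rough sanity check of the quoted ratios, the sketch below divides the H200 figures by a baseline for the A100. The A100 numbers used here (the 80GB variant, with roughly 2.0 TB/s of bandwidth) are our own assumption and do not appear in NVIDIA's statement; with them, capacity comes out at about 1.76x (hence "nearly double") and bandwidth at 2.4x.

```python
# H200 figures as quoted by NVIDIA.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8

# Assumed A100 baseline (80GB variant); these values are NOT from the article.
A100_MEMORY_GB = 80
A100_BANDWIDTH_TBS = 2.0  # rounded; the SXM version is roughly 2.04 TB/s


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBS, A100_BANDWIDTH_TBS)

print(f"capacity: {capacity_ratio:.2f}x, bandwidth: {bandwidth_ratio:.1f}x")
```

Swapping in a different baseline (for example, H100 figures) only requires changing the two assumed constants.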

In terms of AI performance, NVIDIA says the HGX H200 doubles inference speed on the 70-billion-parameter version of Llama 2 compared with the H100. It will be available in 4- and 8-way configurations that are compatible with both the software and hardware of H100 systems. It will work in every type of data center (on-premises, cloud, hybrid cloud and edge) and will be deployed by Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure, among others. It is set to arrive in Q2 2024.
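To put the 4- and 8-way configurations in perspective, the sketch below multiplies NVIDIA's per-GPU H200 figures by the GPU count. This is a naive assumption for illustration only: it treats aggregate memory and bandwidth as simple sums and ignores interconnect limits and memory reserved by software. The function name `aggregate` is hypothetical.

```python
# Per-GPU H200 figures as quoted by NVIDIA.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8


def aggregate(gpus: int) -> tuple[int, float]:
    """Naive totals for an n-way board: per-GPU figures times GPU count.

    Assumption: ignores interconnect limits and memory reserved by software.
    """
    return gpus * H200_MEMORY_GB, gpus * H200_BANDWIDTH_TBS


for n in (4, 8):
    mem, bw = aggregate(n)
    print(f"{n}-way: {mem} GB HBM3e, {bw:.1f} TB/s combined local bandwidth")
```

Under this assumption an 8-way board holds about 1.1 TB of HBM3e in total, though any single GPU still reads its own 141 GB at 4.8 TB/s.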

NVIDIA's other key product is the GH200 Grace Hopper "superchip", which pairs a Grace CPU with a Hopper GPU and is designed for supercomputers, allowing "scientists and researchers to tackle the world’s most challenging problems by accelerating complex AI and HPC applications running terabytes of data," NVIDIA wrote.